Lately, there has been a lot of talk about a new kind of programming called vibe coding, a term coined earlier this year by OpenAI co-founder Andrej Karpathy. It’s a programming approach that uses artificial intelligence (AI) to turn natural language descriptions into executable code.

Vibe coding has gained attention for its ability to speed up development and make coding more accessible. However, as with any emerging technology, it comes with its own set of security challenges.

In this post, we will discuss vibe coding, its potential security risks, and how SecureFlag can help mitigate those risks.

What Is Vibe Coding?

Vibe coding represents a shift in how we approach software development. Developers (and others) can use plain language to describe the functionality they need, and the AI assists in writing code.

For example, if a developer needs a login system, they can describe what they want instead of writing the code by hand, and the AI will generate it. That sounds good in theory, but developers still need to review, refine, and test the generated code to confirm that it works as intended and doesn’t introduce security issues.
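As an illustration of what that review might look for, here is a minimal Python sketch of two properties a generated login system should have: passwords stored as salted, deliberately slow hashes rather than plain text, and a constant-time comparison when checking them. It uses only the standard library; the function names and the iteration count (taken from current OWASP guidance for PBKDF2-HMAC-SHA256, worth re-checking) are assumptions, and this is not a complete authentication system.

```python
import hashlib
import hmac
import os

# Assumed work factor for PBKDF2-HMAC-SHA256; check current guidance before relying on it.
ITERATIONS = 600_000


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, digest) for storage. Never store the plain-text password."""
    salt = os.urandom(16)  # fresh random salt per password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    # compare_digest avoids leaking how many bytes matched through timing differences
    return hmac.compare_digest(digest, expected)
```

If generated code compares a submitted password with `==` against a stored plain-text value, or hashes it with a single fast unsalted call, a reviewer has found something worth fixing before it ships.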

This new way of coding speeds things up and makes development more accessible, even for those without a technical background. But those benefits come with some major downsides, especially on the security front. As more coding gets handed off to AI, new vulnerabilities can start to find their way in.

Why Vibe Coding Is Gaining Popularity

Vibe coding is appealing for many reasons, but one of the big ones is faster development cycles, making it ideal for prototyping or testing new features. AI tools do all the work of generating code based on simple descriptions, allowing users to focus on providing guidance and ideas.

This shift simplifies development for individuals with limited technical experience, who can describe what they want to achieve in plain terms rather than having to master complex programming languages. In reality, though, it’s not that straightforward, because it comes with many security challenges.

We’ve already seen low-code and no-code platforms used extensively, but now, with the rise of AI, even more people without much technical or security experience are turning to vibe coding.

Security Risks Associated with Vibe Coding

The question of whether vibe coding is advancing software development or putting it in peril is a contentious one. So far, we know that it opens the door to vulnerabilities. Let’s check out the primary security risks that come with using AI for code generation:

1. Unverified AI-Generated Code

AI tools that generate code pull from large datasets. However, these systems don’t really distinguish between secure and insecure coding practices. While it’s becoming less common for AI to introduce obvious flaws like simple SQL injections, it can still produce subtle logic errors that are much harder to detect. These issues might weaken critical features, like authentication, or lead to the exposure of sensitive data.
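To make “subtle logic error” concrete, the hypothetical Python sketch below contrasts two versions of an invoice lookup. Both use parameterized queries, so neither is open to SQL injection, but the first never checks who owns the record, so any logged-in user can read someone else’s invoice just by changing the ID. The table, data, and function names are invented for illustration, and the example runs against an in-memory SQLite database.

```python
import sqlite3


def setup() -> sqlite3.Connection:
    """Create a throwaway in-memory database with two users' invoices."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE invoices (id INTEGER PRIMARY KEY, owner TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO invoices (id, owner, amount) VALUES (?, ?, ?)",
        [(1, "alice", 120.0), (2, "bob", 75.5)],
    )
    return conn


def get_invoice_unsafe(conn: sqlite3.Connection, current_user: str, invoice_id: int):
    # Injection-safe, but current_user is ignored: a broken access control bug.
    return conn.execute(
        "SELECT id, owner, amount FROM invoices WHERE id = ?", (invoice_id,)
    ).fetchone()


def get_invoice_safe(conn: sqlite3.Connection, current_user: str, invoice_id: int):
    # The ownership check is part of the query, so other users' rows are never returned.
    return conn.execute(
        "SELECT id, owner, amount FROM invoices WHERE id = ? AND owner = ?",
        (invoice_id, current_user),
    ).fetchone()
```

The flaw in the first function is easy to miss because the code looks clean and runs without errors; it only surfaces when a reviewer asks who should be allowed to see each row.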

Because AI models are still evolving, the code these tools produce can differ considerably from code written the traditional way. The tools also don’t provide the thorough review and oversight that human developers do, which may lead to insecure implementations.

2. Risk of Over-Permissioned AI Agents

To be effective, many AI-powered coding tools require extensive permissions. These tools may need access to a developer’s codebase, version control systems, or even sensitive assets such as credentials for cloud services or other APIs.

There are already many API security risks. If someone uses vibe coding but doesn’t really understand how APIs work and the threats involved, they could expose critical assets like source code, API keys, or private credentials.
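One habit worth checking for in generated code is hardcoded credentials. The minimal Python sketch below, using a hypothetical `PAYMENT_API_KEY` environment variable and a hypothetical `MissingSecretError` exception, reads a key from the environment instead of the source file, fails loudly if it is missing, and masks it before it can appear in logs. It is an illustration under those assumptions; production systems often rely on a dedicated secrets manager instead.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret is not configured (hypothetical helper)."""


def load_api_key(var_name: str = "PAYMENT_API_KEY") -> str:
    # Read the key from the environment so it never lives in the codebase or version control.
    key = os.environ.get(var_name)
    if not key:
        raise MissingSecretError(f"{var_name} is not set")
    return key


def redact(secret: str, visible: int = 4) -> str:
    # Show only the last few characters, e.g. for log lines that identify which key was used.
    if len(secret) <= visible:
        return "*" * len(secret)
    return "*" * (len(secret) - visible) + secret[-visible:]
```

Failing at startup when the variable is missing is a deliberate choice: it surfaces configuration mistakes immediately instead of letting the application fall back to a default or empty key.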

3. Lack of Transparency and Compliance Issues

AI-generated code can be opaque to developers, who may not understand how it was put together. That lack of transparency makes it difficult to verify that the code meets security standards and requirements such as OWASP guidance, GDPR, or ISO 27001.

Auditing becomes a challenge if you can’t explain how the code came to be, which makes it harder to confirm it actually meets the required security benchmarks. That’s why having the right context about the existing codebase, its structure, dependencies, and everything else is key to making sure the AI-generated parts stay compliant and secure.

4. The Perils of Trusting AI Without Human Scrutiny

Relying too heavily on AI-generated code without human oversight falls into what the OWASP Top 10 for LLM Applications calls Excessive Agency: giving the AI too much control without adequate checks. Letting code go live without proper testing and review is risky, as vulnerabilities could easily end up in production. At the end of the day, security still depends on human judgment and careful validation, no matter how convenient AI might seem.

When developers rely on AI-generated code, they might not fully understand the underlying logic. In traditional coding, the process of writing every line forces a developer to think critically about the logic. However, with vibe coding, since developers are reviewing code they didn’t write, it’s easier to overlook subtle security flaws or logic mistakes, leading to increased risk.

5. The Emerging Profile of Vibe Developers

Vibe coding may attract developers without formal training in secure coding practices. Traditional software developers are taught how to write functional code, but vibe coding opens the door to non-coders and individuals without that background.

These developers might lack an understanding of security principles, which could lead to vulnerabilities in the code they help generate. Of course, this is true for traditional coding too, which is why secure coding training is so important.

AI-assisted development just emphasizes the need for better developer security training and education for all developers, no matter their experience level.

6. Threat Modeling Often Overlooked

When development speeds up, security reviews often slow down. In the rush to get new features to production, things like code audits and threat modeling can get pushed aside. But skipping these steps, especially when AI-generated code is involved, can leave applications open to serious breaches.

Making threat modeling part of the process, no matter how fast you’re moving, is key to keeping things secure. Automated threat modeling tools like ThreatCanvas can help with this.

Lessons from a Real-World Incident

Earlier this year, a post on X gained traction when a “vibe coder” revealed that his SaaS platform was under attack.

The business, which had been built entirely with AI assistance and no manually written code, was facing issues such as bypassed subscriptions, maxed-out API key usage, and database corruption.

What made his announcement particularly striking was his follow-up statement: “As you might expect, I’m not very technical, so it’s taking me longer than usual to resolve this.”

This shows how people who use vibe coding without technical and security knowledge can end up producing applications with serious security risks.

How SecureFlag Helps With Vibe Coding Security Challenges

SecureFlag can help teams build the secure coding knowledge needed to review, validate, and improve AI-generated code. By focusing on vulnerabilities and best practices, SecureFlag helps organizations ensure that the code they produce aligns with industry security standards.

Here are some of the ways SecureFlag assists in addressing vibe coding security challenges:

1. Hands-On Secure Coding Labs

SecureFlag offers interactive labs that help developers strengthen their secure coding skills in safe virtualized environments. Training becomes fun, rather than a chore, and involves finding and fixing vulnerabilities, applying secure coding principles, and testing code before deployment.

Just a beginner? Fear not. Our labs are designed for developers of all skill levels, including beginners with little secure coding experience.

2. Role-Based Learning Paths

SecureFlag’s platform also offers learning paths for specific roles to make sure that both technical and non-technical team members receive the training they need. Aside from secure coding for developers, project managers can learn about security awareness, and QA teams can improve their test design skills.

3. Threat Modeling with ThreatCanvas

SecureFlag’s ThreatCanvas platform makes threat modeling more accessible by helping teams identify and understand security risks. That’s especially useful when dealing with AI-generated code, since it helps catch issues early on, right from the design stage. ThreatCanvas enhances productivity and helps developers keep their code secure without slowing things down.

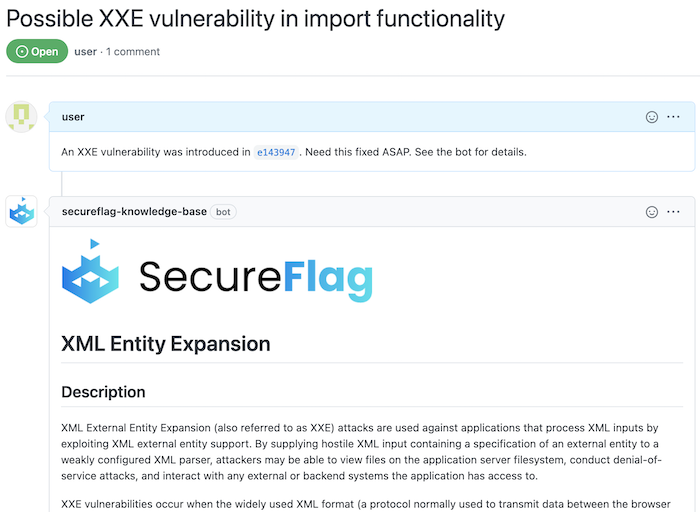

4. Integration With Vulnerability Scanners

SecureFlag integrates with popular vulnerability scanning tools through SARIF (Static Analysis Results Interchange Format), a standard format for reporting scanner findings. That means when issues are flagged in the code, SecureFlag can automatically recommend hands-on training labs that walk developers through how to solve the problem and avoid similar mistakes in the future.

It’s not just about finding vulnerabilities, but helping developers understand and mitigate them with the right context. This is really useful when AI-generated code is involved, where the logic might not always be obvious right away. With this kind of integration, teams can respond faster, making secure coding part of everyday development workflows.
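For readers unfamiliar with SARIF, the sketch below shows roughly what a consumer of scanner output does: it reads a SARIF 2.1.0 log, extracts each result’s rule ID, file, and line, and tallies the findings into training topics. The rule IDs, topic names, and `RULE_TO_TOPIC` mapping are hypothetical placeholders; this is not SecureFlag’s integration or API, only an illustration of the file format using Python’s standard library.

```python
import json
from collections import Counter

# Hypothetical mapping from scanner rule IDs to training topics (placeholder values).
RULE_TO_TOPIC = {
    "example/sql-injection": "SQL Injection",
    "example/hardcoded-credentials": "Secrets Management",
}


def summarize_sarif(sarif_text: str) -> list[tuple[str, str, int]]:
    """Return (rule_id, file_uri, start_line) for every result in a SARIF log."""
    log = json.loads(sarif_text)
    findings = []
    for run in log.get("runs", []):
        for result in run.get("results", []):
            rule_id = result.get("ruleId", "unknown")
            # A result may omit locations; fall back to placeholders in that case.
            location = (result.get("locations") or [{}])[0]
            physical = location.get("physicalLocation", {})
            uri = physical.get("artifactLocation", {}).get("uri", "?")
            line = physical.get("region", {}).get("startLine", 0)
            findings.append((rule_id, uri, line))
    return findings


def recommend_topics(findings: list[tuple[str, str, int]]) -> Counter:
    """Count findings per training topic, using a generic topic for unmapped rules."""
    return Counter(RULE_TO_TOPIC.get(rule_id, "General Secure Coding") for rule_id, _, _ in findings)
```

Because SARIF is shared across tools, the same parsing logic works regardless of which scanner produced the log, which is what makes it a practical bridge between finding a vulnerability and learning how to fix it.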

Build Secure Applications From the Start With SecureFlag

AI-generated code isn’t going anywhere soon, but it’s still essential to understand how it works and what security issues it may contain. SecureFlag’s training labs, learning paths, integrations, and ThreatCanvas make reducing vulnerabilities much easier.

Whether you’re working with AI-generated code, traditional coding methods, or a combination of both, SecureFlag offers the resources and support to help keep your development process secure, compliant, and ready for changing security challenges.