Kubernetes Container Orchestration!…

Now that we have your attention, let’s dig a little deeper into Kubernetes, an open-source orchestration layer that manages container-based applications.

Kubernetes security goes beyond access controls - the entire container lifecycle needs to be secured. This requires knowing the Kubernetes security best practices and the tools that help you implement them.

In this article, we’ll explore some of the security challenges you’re going to face when administering Kubernetes clusters and the appropriate preventative measures to batten down the Kubernetes hatches.

What is Kubernetes security?

Think of Kubernetes as an OS for the cloud, orchestrating containers grouped in Pods and distributed across a number of servers. This dynamic setup involves constantly spinning containers up and down in line with user demand for services and computing resources, and Kubernetes has been designed to be highly portable and extensible to support it.

These scalable, accessible traits, combined with its initial development and promotion by Google, one of the world's largest online companies, have made Kubernetes the ubiquitous tool in its class (Kubernetes has 80% of the container orchestrators' market share). However, although Kubernetes lets you coordinate computing resources of any kind and size, data safety and secure cloud operations can only be achieved with Kubernetes-specific knowledge, applied in practice during both implementation and operations.

Kubernetes security best practices and challenges

Kubernetes security best practices are vital to protect sensitive data and containerized applications. By adding a layer of security, you can prevent disruptions and data breaches. The first important step is to understand the common Kubernetes security challenges you may be up against. To help you create a robust Kubernetes security infrastructure and minimize risks, here are some important things to consider.

Know your cluster

One of the many security challenges in Kubernetes stems from the distributed and interoperable nature of containers, which makes maintaining security across the infrastructure both arduous and unending. In particular, because everything is containerised, it is very difficult to itemise all the applications running on Kubernetes and identify the security risks they pose.

Still, the following simple steps can largely mitigate security concerns:

- Maintain an asset inventory - The modern cloud is a continually morphing IT infrastructure. An asset inventory is a basic part of any security program and the foundation of vulnerability and patch management programs.

- Assess the risk level of your assets - Starting from the asset inventory, determine the importance of each asset and triage your risk assessment efforts accordingly.

- Continuously scan for vulnerabilities - As the baseline of your cloud infrastructure keeps changing with new applications and updates, fresh vulnerabilities arise. Make image scanning part of your CI/CD pipeline and generate alerts when high-severity, fixable vulnerabilities are detected. Also, schedule periodic security assessments by penetration testers, ensuring they are particularly experienced in environments that rely heavily on containerisation.
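As a minimal sketch of the scanning step, the Python script below reads a vulnerability report and fails the CI job when it finds HIGH or CRITICAL issues that already have a fix available. It assumes a report shaped like Trivy's JSON output (a `Results` list whose entries carry `Vulnerabilities` with `Severity` and `FixedVersion` fields). Other scanners use different schemas, and the severity threshold is an assumption to adjust to your own policy.

```python
import json
import sys

# Assumed blocking threshold; adjust to your organisation's policy.
BLOCKING_SEVERITIES = {"HIGH", "CRITICAL"}


def blocking_findings(report: dict) -> list:
    """Return high-severity findings that already have a fixed version available.

    Assumes a Trivy-style JSON report:
    {"Results": [{"Target": ..., "Vulnerabilities": [{...}, ...]}, ...]}
    """
    findings = []
    for result in report.get("Results") or []:
        # "Vulnerabilities" can be missing or null for a clean target.
        for vuln in result.get("Vulnerabilities") or []:
            severe = vuln.get("Severity") in BLOCKING_SEVERITIES
            fixable = bool(vuln.get("FixedVersion"))
            if severe and fixable:
                findings.append({
                    "target": result.get("Target"),
                    "id": vuln.get("VulnerabilityID"),
                    "package": vuln.get("PkgName"),
                    "fixed_in": vuln.get("FixedVersion"),
                })
    return findings


def main(path: str) -> int:
    with open(path, encoding="utf-8") as fh:
        findings = blocking_findings(json.load(fh))
    for f in findings:
        # One alert line per finding; a real pipeline might forward these elsewhere.
        print(f"ALERT {f['id']} in {f['package']} ({f['target']}): fixed in {f['fixed_in']}")
    # A non-zero exit status fails the CI job.
    return 1 if findings else 0


if __name__ == "__main__" and len(sys.argv) == 2:
    sys.exit(main(sys.argv[1]))
```

In a pipeline you might run `trivy image --format json --output report.json <image>` and then pass `report.json` to this script. Trivy can also enforce a similar gate on its own with `--severity HIGH,CRITICAL --ignore-unfixed --exit-code 1`. A separate script is mainly useful when you want custom alert formatting or routing.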

Supply chains are a security nightmare

Although third-party software may offer instant solutions to your container management and business automation needs, public image registries and software repositories pose significant security risks.

Use private image registries for your cluster to reduce the risks arising from spurious third-party applications. Private registries also simplify asset management practices by enabling the control of which software runs in which environment. If you need to use vendor registries, ensure that only whitelisted sources are used.
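The allowlist idea can be sketched as a check over a pod spec. The Python below is a hypothetical helper, not part of any Kubernetes API. It derives each image's registry using Docker's reference rules: the first path component is a registry only if it contains a `.` or `:` or is `localhost`, otherwise the image comes from Docker Hub. It then reports images from registries outside an assumed allowlist. In a real cluster you would enforce this at admission time, for example with a validating admission webhook or a policy engine such as OPA Gatekeeper or Kyverno.

```python
# Hypothetical allowlist: replace with the registries your organisation trusts.
# Entries must match exactly, including any port (e.g. "registry.example.com:5000").
ALLOWED_REGISTRIES = {"registry.internal.example.com", "ghcr.io"}


def registry_of(image: str) -> str:
    """Return the registry host of an image reference (Docker defaulting rules)."""
    first, sep, _rest = image.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first
    # No explicit registry: Docker Hub is implied.
    return "docker.io"


def disallowed_images(pod: dict, allowed=ALLOWED_REGISTRIES) -> list:
    """List images in a pod spec whose registry is not on the allowlist."""
    spec = pod.get("spec", {})
    containers = spec.get("initContainers", []) + spec.get("containers", [])
    return [c["image"] for c in containers if registry_of(c["image"]) not in allowed]


if __name__ == "__main__":
    pod = {"spec": {"containers": [
        {"name": "app", "image": "registry.internal.example.com/team/app:2.1"},
        {"name": "sidecar", "image": "nginx:1.25"},
    ]}}
    print(disallowed_images(pod))  # ['nginx:1.25']
```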

Keep operating system base images simple, with no unnecessary components. Remove non-essential package managers, language interpreters, and compilers so that a would-be attacker cannot use them to build offensive capabilities and spread through your network. Make sure images always use up-to-date versions of their components.

The flat network problem

By default, the Kubernetes network model is flat, meaning no network segmentation is in place. This configuration can pose a significant problem because every pod can communicate with every other pod, and a single compromised pod can be used by an attacker to move laterally within the cluster.

To address this problem, use a network plugin that firmly enforces network policies, like Weave Net, Calico, or Kube-router, and configure which groups of pods are allowed to communicate with each other.
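To illustrate configuring which pods may talk to each other, the Python below builds two NetworkPolicy objects and prints them as JSON, which `kubectl apply -f` accepts. The first is a default-deny policy for all ingress in a namespace. The second is an exception that lets pods labelled `app: frontend` reach pods labelled `app: backend` on TCP port 8080. The namespace, labels and port are hypothetical, and the policies only take effect with a network plugin that enforces NetworkPolicy.

```python
import json


def default_deny_ingress(namespace: str) -> dict:
    """Deny all ingress to every pod in the namespace (an empty podSelector selects all pods)."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


def allow_app_to_app(namespace: str, source_app: str, target_app: str, port: int) -> dict:
    """Allow pods labelled app=<source_app> to reach app=<target_app> on one TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{source_app}-to-{target_app}", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": source_app}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


if __name__ == "__main__":
    # Hypothetical namespace, labels and port.
    manifest = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [
            default_deny_ingress("shop"),
            allow_app_to_app("shop", "frontend", "backend", 8080),
        ],
    }
    print(json.dumps(manifest, indent=2))
```

Starting from default-deny and adding narrow exceptions keeps every permitted path explicit, so a reviewer can see at a glance which pods are allowed to communicate.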

Moreover, fully separating Kubernetes clusters from one another at the network level ensures that attackers can't exploit lax network policies to jump across network segments.

Secure by default? Don’t be so sure…

In the interest of fast deployment and simplified management, the default settings in Kubernetes often favour simplicity over security, and, unfortunately, certain older versions of Kubernetes shipped with default settings that posed significant risks.

To address this challenge, adopt the latest Kubernetes updates and configuration guidelines and periodically review the policies and settings for your cluster(s). Handy tools such as Kube-Scan or kube-hunter can be used to perform security reviews of cluster components as part of your security assessment process.
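To give a feel for what such a review checks, here is a deliberately small Python sketch that flags a few risky pod settings in the JSON returned by `kubectl get pods -A -o json`. It is a hypothetical helper, far narrower than Kube-Scan or kube-hunter, and the list of checks is an assumption chosen for illustration.

```python
import json
import sys


def risky_settings(pod: dict) -> list:
    """Flag a handful of insecure pod and container settings."""
    name = pod.get("metadata", {}).get("name", "<unnamed>")
    spec = pod.get("spec", {})
    findings = []
    for field in ("hostNetwork", "hostPID", "hostIPC"):
        if spec.get(field):
            findings.append(f"{name}: {field} is enabled")
    # Only the pod spec is checked here, so pods whose service account already
    # disables token automounting may still be flagged.
    if spec.get("automountServiceAccountToken") is not False:
        findings.append(f"{name}: service account token not explicitly unmounted")
    pod_sc = spec.get("securityContext", {})
    for c in spec.get("initContainers", []) + spec.get("containers", []):
        sc = c.get("securityContext", {})
        cname = f"{name}/{c.get('name')}"
        if sc.get("privileged"):
            findings.append(f"{cname}: privileged container")
        # allowPrivilegeEscalation is effectively true when unset.
        if sc.get("allowPrivilegeEscalation", True):
            findings.append(f"{cname}: privilege escalation allowed")
        # runAsNonRoot can be set per container or inherited from the pod.
        if not sc.get("runAsNonRoot", pod_sc.get("runAsNonRoot", False)):
            findings.append(f"{cname}: may run as root")
    return findings


if __name__ == "__main__" and len(sys.argv) == 2:
    # Usage: kubectl get pods -A -o json > pods.json, then pass pods.json to this script.
    with open(sys.argv[1], encoding="utf-8") as fh:
        for pod in json.load(fh).get("items", []):
            for finding in risky_settings(pod):
                print(finding)
```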

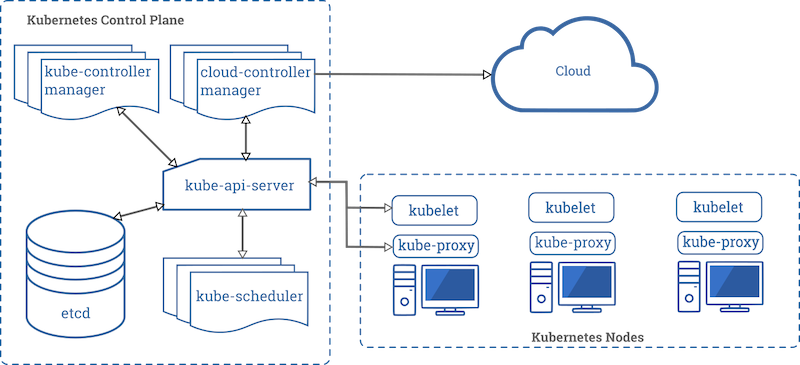

Kubernetes follows a “hub-and-spoke” API pattern to let the Control Plane and the Node components communicate with each other. For this reason, correct access and authorisation controls must be enabled in every Kubernetes component’s API as the first line of defence.

API Server - The pivotal point where the cluster's security configuration is applied. All the other components read and modify the cluster's shared state through its REST API; the API server is the only component that talks directly to the etcd state store.

Kubernetes can use client certificates, bearer tokens, and other authentication mechanisms to authenticate requestors. Once authenticated, every API call must be authorised; in most clusters this is done by the Role-Based Access Control (RBAC) module, which regulates access to resources based on the roles within your organisation. RBAC is highly configurable, and its policies must be reviewed thoroughly to achieve least privilege without leaving unintentional security holes.
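As a least-privilege illustration, the Python below builds a namespaced RBAC Role that can only read pods, a RoleBinding granting it to a single user, and a small helper that flags rules using `*` wildcards, a common source of unintentional holes. The namespace `dev`, the user `jane` and the helper itself are hypothetical; the object shapes follow the `rbac.authorization.k8s.io/v1` API.

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"


def pod_reader_role(namespace: str) -> dict:
    """A Role allowing read-only access to pods in a single namespace."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": "pod-reader", "namespace": namespace},
        # "" is the core API group, where pods live.
        "rules": [{"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list", "watch"]}],
    }


def bind_role_to_user(role: dict, user: str) -> dict:
    """A RoleBinding granting the given Role to one user in the Role's namespace."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {
            "name": f"{role['metadata']['name']}-{user}",
            "namespace": role["metadata"]["namespace"],
        },
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_GROUP}],
        "roleRef": {"kind": "Role", "name": role["metadata"]["name"], "apiGroup": RBAC_GROUP},
    }


def wildcard_rules(role: dict) -> list:
    """Return rules that use '*' in apiGroups, resources or verbs."""
    return [
        rule for rule in role.get("rules", [])
        if any("*" in rule.get(key, []) for key in ("apiGroups", "resources", "verbs"))
    ]


if __name__ == "__main__":
    role = pod_reader_role("dev")
    binding = bind_role_to_user(role, "jane")
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [role, binding]}, indent=2))
```

After applying the objects, `kubectl auth can-i --list --as=jane -n dev` shows what the user can actually do, which is a quick way to confirm that the binding grants no more than intended.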

Etcd - The key-value store that holds the cluster's state and configuration. For this reason, write access to the etcd backend is equivalent to cluster-admin access, and read access can be used to escalate privileges fairly quickly.

In versions prior to etcd 2.1, authentication did not exist, but currently, it supports strong credentials such as mutual authentication via client certificates. This should always be enforced by creating a new key pair for every entity in the cluster that needs to access the etcd API.
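One way to verify this in practice is to inspect component command lines, which on kubeadm-style clusters appear in the static pod manifests. The hypothetical Python checker below assumes flags written in `--flag=value` form. It tests that etcd requires client certificates (`--client-cert-auth`, `--trusted-ca-file`, `--cert-file`, `--key-file`) and serves clients only over HTTPS, and that the API server presents its own client key pair to etcd (`--etcd-cafile`, `--etcd-certfile`, `--etcd-keyfile`). Traffic between etcd members has analogous `--peer-*` flags that this sketch does not cover.

```python
def parse_flags(argv: list) -> dict:
    """Map '--flag=value' arguments to values; a bare '--flag' is treated as 'true'."""
    flags = {}
    for arg in argv:
        if arg.startswith("--"):
            key, sep, value = arg.partition("=")
            flags[key] = value if sep else "true"
    return flags


def etcd_client_tls_problems(argv: list) -> list:
    """Report missing mutual-TLS settings on an etcd command line."""
    flags = parse_flags(argv)
    problems = []
    if flags.get("--client-cert-auth") != "true":
        problems.append("--client-cert-auth is not enabled")
    for flag in ("--trusted-ca-file", "--cert-file", "--key-file"):
        if not flags.get(flag):
            problems.append(f"{flag} is not set")
    urls = flags.get("--listen-client-urls")
    if not urls:
        problems.append("--listen-client-urls is not set (etcd's default listener is plain HTTP)")
    else:
        for url in urls.split(","):
            if not url.startswith("https://"):
                problems.append(f"plaintext client listener: {url}")
    return problems


def apiserver_etcd_client_problems(argv: list) -> list:
    """Report missing etcd client credentials on a kube-apiserver command line."""
    flags = parse_flags(argv)
    required = ("--etcd-cafile", "--etcd-certfile", "--etcd-keyfile")
    return [f"{flag} is not set" for flag in required if not flags.get(flag)]
```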

-

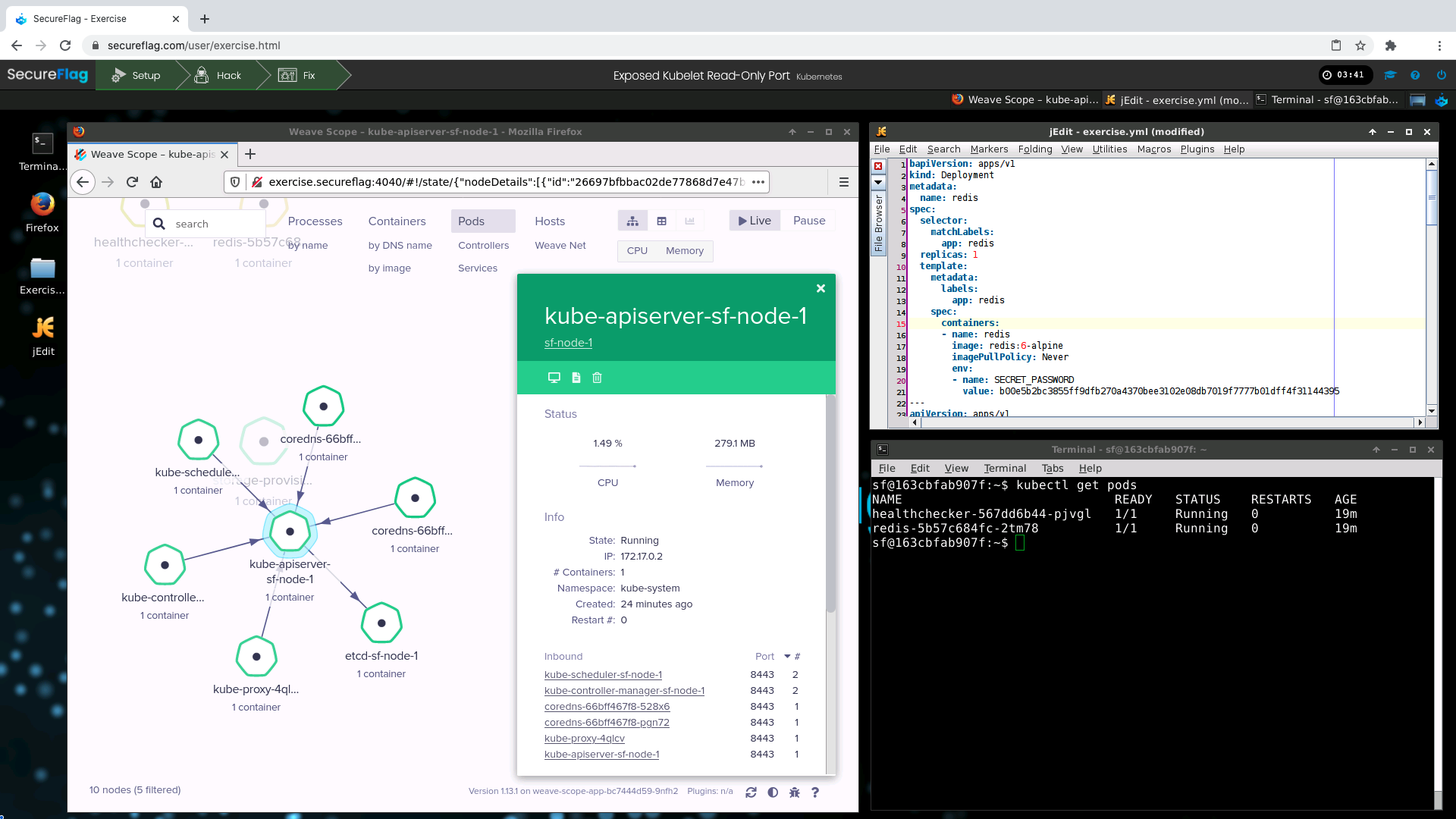

Kubelet - Kubelet is an agent running on every node that should be configured correctly to prevent unauthorised access from the pods.

In versions prior to Kubernetes 1.7, the kubelet had read-write access to numerous resources it had no responsibility for and, if compromised, could be used to compromise other nodes in the cluster. More recent versions restrict the kubelet to the resources related to the node it runs on.

It's imperative to restrict access to the kubelet's two REST API ports, one read-write and one read-only. The read-only port can simply be disabled to prevent any leak of sensitive data, but the read-write port can't be disabled, even though its compromise could result in a takeover of the cluster.

For this reason, make sure unauthenticated (anonymous) access to the read-write port is disabled, and require all clients to authenticate with X.509 client certificates or bearer tokens.
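These kubelet settings can be expressed in a KubeletConfiguration file. The Python below builds one and checks an existing configuration for the issues discussed. It requires `readOnlyPort: 0` (disabling the read-only port, which is 10255 when enabled), disables anonymous access to the read-write port (10250 by default), accepts X.509 client certificates and webhook-validated bearer tokens, and delegates authorisation decisions to the API server. The CA path is a hypothetical example. The field names follow the `kubelet.config.k8s.io/v1beta1` API, and because defaults differ between the config file, command-line flags and versions, the checker insists on explicit values.

```python
import json


def hardened_kubelet_config(client_ca_file: str) -> dict:
    return {
        "apiVersion": "kubelet.config.k8s.io/v1beta1",
        "kind": "KubeletConfiguration",
        # 0 disables the unauthenticated read-only port.
        "readOnlyPort": 0,
        "authentication": {
            # Reject requests that carry no credentials.
            "anonymous": {"enabled": False},
            # Validate bearer tokens against the API server (TokenReview).
            "webhook": {"enabled": True},
            # Accept client certificates signed by this CA.
            "x509": {"clientCAFile": client_ca_file},
        },
        # Ask the API server whether each request is allowed (SubjectAccessReview).
        "authorization": {"mode": "Webhook"},
    }


def kubelet_config_problems(cfg: dict) -> list:
    """Report settings that leave kubelet ports open to unauthenticated clients."""
    problems = []
    if cfg.get("readOnlyPort") != 0:
        problems.append("readOnlyPort is not explicitly set to 0")
    authn = cfg.get("authentication", {})
    if authn.get("anonymous", {}).get("enabled") is not False:
        problems.append("anonymous access is not explicitly disabled")
    has_x509 = bool(authn.get("x509", {}).get("clientCAFile"))
    has_webhook = authn.get("webhook", {}).get("enabled") is True
    if not (has_x509 or has_webhook):
        problems.append("no client authentication method is configured")
    if cfg.get("authorization", {}).get("mode") != "Webhook":
        problems.append("authorization mode is not Webhook")
    return problems


if __name__ == "__main__":
    # JSON is valid YAML, so this output can serve as a kubelet --config file.
    print(json.dumps(hardened_kubelet_config("/etc/kubernetes/pki/ca.crt"), indent=2))
```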

Protect your nodes

Because every node communicates with every other node in the cluster, a single vulnerable node has the potential to compromise the entire cluster.

To mitigate this, always apply the principle of least privilege to node access rights. Reduce and monitor direct access to the hosts, and require developers to use "kubectl exec" to access containers instead of connecting to nodes over SSH.

Keep your nodes’ operating systems tidy and clean. Install security updates and patches, limit access following the principle of least privilege, monitor your system, and perform periodic security auditing on your servers.

Set boundaries

Create separate namespaces and assign resources and accounts to them, taking advantage of the Kubernetes design that encourages the logical separation of resources.

Use Kubernetes authorization modules, such as RBAC, to define fine-grained access control rules for specific namespaces, resources and operations.
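As a sketch of namespace-based boundaries, the Python below generates the objects for a hypothetical per-team namespace. The namespace itself is labelled so that Pod Security Admission (stable since Kubernetes 1.25) enforces the `restricted` profile. It also gets a dedicated service account, a ResourceQuota capping the pod count, and a RoleBinding that grants the service account the built-in `edit` ClusterRole within this namespace only. The team name, quota and choice of `edit` are assumptions for illustration.

```python
import json


def team_namespace(team: str, max_pods: int = 20) -> list:
    ns = f"team-{team}"
    sa = f"{team}-deployer"
    return [
        {
            "apiVersion": "v1",
            "kind": "Namespace",
            "metadata": {
                "name": ns,
                # Pod Security Admission rejects pods that violate the "restricted" profile.
                "labels": {"pod-security.kubernetes.io/enforce": "restricted"},
            },
        },
        {
            "apiVersion": "v1",
            "kind": "ServiceAccount",
            "metadata": {"name": sa, "namespace": ns},
            # Pods using this account get its token only if they explicitly opt in.
            "automountServiceAccountToken": False,
        },
        {
            "apiVersion": "v1",
            "kind": "ResourceQuota",
            "metadata": {"name": "pod-quota", "namespace": ns},
            # Quota values are Kubernetes quantities, written as strings.
            "spec": {"hard": {"pods": str(max_pods)}},
        },
        {
            "apiVersion": "rbac.authorization.k8s.io/v1",
            "kind": "RoleBinding",
            "metadata": {"name": f"{sa}-edit", "namespace": ns},
            "subjects": [{"kind": "ServiceAccount", "name": sa, "namespace": ns}],
            # Binding a ClusterRole via a RoleBinding grants it in this namespace only.
            "roleRef": {"apiGroup": "rbac.authorization.k8s.io", "kind": "ClusterRole", "name": "edit"},
        },
    ]


if __name__ == "__main__":
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": team_namespace("payments")}, indent=2))
```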

Beware the cloud metadata

Cloud platforms commonly expose metadata services to instances. These are typically provided via REST APIs and furnish information about the instance itself, such as network, storage, and temporary security credentials.

Since pods running on an instance can usually reach these APIs, the credentials they expose can help attackers move within the cluster or even to other cloud services under the same account.

When running Kubernetes on a cloud platform, make sure you use network policies to restrict pod access to the metadata API, and also avoid using provisioning data to deliver secrets.
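A hedged sketch of the metadata restriction: the NetworkPolicy below, built in Python, allows a namespace's pods to send IPv4 traffic anywhere except 169.254.169.254, the link-local metadata address used by the major cloud providers, and keeps in-cluster traffic such as DNS open with a second rule. The namespace is hypothetical. Some platforms expose metadata through other addresses or mechanisms, and network plugins differ in how `ipBlock` rules treat in-cluster traffic, so verify the behaviour on your own cluster. The small `ipblock_allows` helper, also hypothetical, evaluates only the `ipBlock` rules to show which addresses the first rule lets through.

```python
import ipaddress
import json

# Link-local metadata address used by AWS, Azure and Google Cloud.
METADATA_CIDR = "169.254.169.254/32"


def deny_metadata_egress(namespace: str) -> dict:
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "deny-cloud-metadata", "namespace": namespace},
        "spec": {
            "podSelector": {},  # every pod in the namespace
            "policyTypes": ["Egress"],
            "egress": [
                # Any IPv4 destination except the metadata endpoint.
                {"to": [{"ipBlock": {"cidr": "0.0.0.0/0", "except": [METADATA_CIDR]}}]},
                # Pods in any namespace (including cluster DNS), in case the plugin
                # does not match in-cluster traffic against ipBlock rules.
                {"to": [{"namespaceSelector": {}}]},
            ],
        },
    }


def ipblock_allows(policy: dict, ip: str) -> bool:
    """Return True if some ipBlock egress rule in the policy admits the address."""
    addr = ipaddress.ip_address(ip)
    for rule in policy["spec"].get("egress", []):
        for peer in rule.get("to", []):
            block = peer.get("ipBlock")
            if block is None:
                continue
            in_cidr = addr in ipaddress.ip_network(block["cidr"])
            excepted = any(addr in ipaddress.ip_network(e) for e in block.get("except", []))
            if in_cidr and not excepted:
                return True
    return False


if __name__ == "__main__":
    policy = deny_metadata_egress("apps")
    print(json.dumps(policy, indent=2))
    print(ipblock_allows(policy, "169.254.169.254"))  # False
    print(ipblock_allows(policy, "203.0.113.10"))     # True
```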

The Bottom Line of Kubernetes Security

Security is often overlooked in DevOps processes, mainly due to a lack of appropriate skills, tight deadlines, and a perception that it's not a core requirement. However, as breach after breach shows us, security, or a lack thereof, is the technological tidbit that can make or break organisations, compromise countries, grab front-page headlines, and more.

Teaching DevOps personnel how to embrace security from the start when building and maintaining a cloud-ready environment is a fundamental activity, as this approach can prevent heavy losses due to security breaches eventuating down the track.

At SecureFlag, we teach Secure DevOps practices through hands-on, practical exercises to ensure your code and infrastructure are as robust as they can be. We know full well that good security practice can often be difficult to learn, let alone implement: that's why we built a training platform that teaches participants in live, dedicated Kubernetes clusters, where they learn to identify security misconfigurations and remediate them hands-on.

The platform offers 100% hands-on training, with no multiple-choice questions involved, and uses an engine able to live-test user changes, instantly displaying whether the infrastructure has been secured and awarding points upon exercise completion.

Security starts with the first keystroke - contact SecureFlag for a demo today!