Generative AI risks should be taken seriously.

The rise of generative AI tools and AI coding assistants in software development has given organizations and developers the means to achieve levels of productivity and efficiency that would have been hard to imagine just a few short years ago.

But as we’ve talked about recently in our OWASP LLM Top 10 post, this new technology isn’t without its risks.

By generating code, artificial intelligence reduces the burden on developers, speeds up the development process, and can even produce features that would have been time-consuming or challenging to create manually.

However, like any technological advancement, the use of generative AI in writing code is not without its limitations and security risks. This article delves into the potential drawbacks and dangers of relying too heavily on generative AI for coding and explores strategies to mitigate these risks.

What is generative AI?

So, what exactly do we mean by generative AI?

Many people will be familiar with the latest trends in artificial intelligence, as many businesses have looked for ways to implement AI, either as virtual assistants or as a means of automating different tasks.

You have most likely heard of GPT and maybe Copilot, Llama, or Claude. On the surface, they accept user input and generate a response incredibly quickly, and increasingly, we see them embedded into IDEs in the form of AI coding assistants that can generate code almost instantaneously.

Limits of generative AI when writing code

Code quality and contextual understanding

One of the primary concerns with AI-generated code is the quality of the output. While generative AI can produce functioning code, it might not always generate the most efficient or optimal solutions. A persistent problem with AI in coding environments has been producing contextually relevant code that follows a codebase’s existing patterns and makes use of its existing functions. While this is improving, it is important to consider when rolling AI tools out to development teams.

It is also important to note that AI’s suggestions are based on patterns and examples found in its training data, which might not represent the best practices or the most effective coding techniques.

For instance, an AI might suggest a solution that works but is computationally expensive or does not scale well with increased data loads. In environments where performance is critical, such inefficiencies can lead to significant issues down the line.
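As a rough illustration of that point, here is a minimal sketch of two ways to find duplicate values in a list. Both function names are made up for this example. The first version is the kind of code that works in a quick test but slows down sharply as the input grows, while the second does the same job in a single pass.

```python
def find_duplicates_quadratic(items):
    """Works, but rescans the earlier part of the list for every item: O(n^2)."""
    duplicates = []
    for i, item in enumerate(items):
        # items[:i] is copied and searched on every iteration
        if item in items[:i] and item not in duplicates:
            duplicates.append(item)
    return duplicates


def find_duplicates_linear(items):
    """Same result for hashable items, using sets for O(1) average lookups."""
    seen = set()
    reported = set()
    duplicates = []
    for item in items:
        if item in seen and item not in reported:
            duplicates.append(item)
            reported.add(item)
        seen.add(item)
    return duplicates
```

Both functions return the duplicated values in the order they are first repeated, so a reviewer comparing them on small inputs would see no difference. The gap only shows up under load, which is why performance needs to be part of the review.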

Creativity and innovation

Another limitation with generative AI is that it operates by identifying patterns in the data it has been trained on, which can limit its ability to produce genuinely innovative or creative solutions. While it can replicate and slightly modify existing solutions, coming up with novel approaches that significantly differ from what it has seen before is challenging.

Research published by Cornell University found that in scenarios where innovative problem-solving is needed, leaning too heavily on AI might stifle creativity. Developers might find themselves constrained or distracted by the AI’s suggestions, potentially missing out on more innovative and effective solutions that they would otherwise have devised.

Risks of generative AI when writing code

While generative AI can ease the coding burden, there are some risks to consider:

Security vulnerabilities

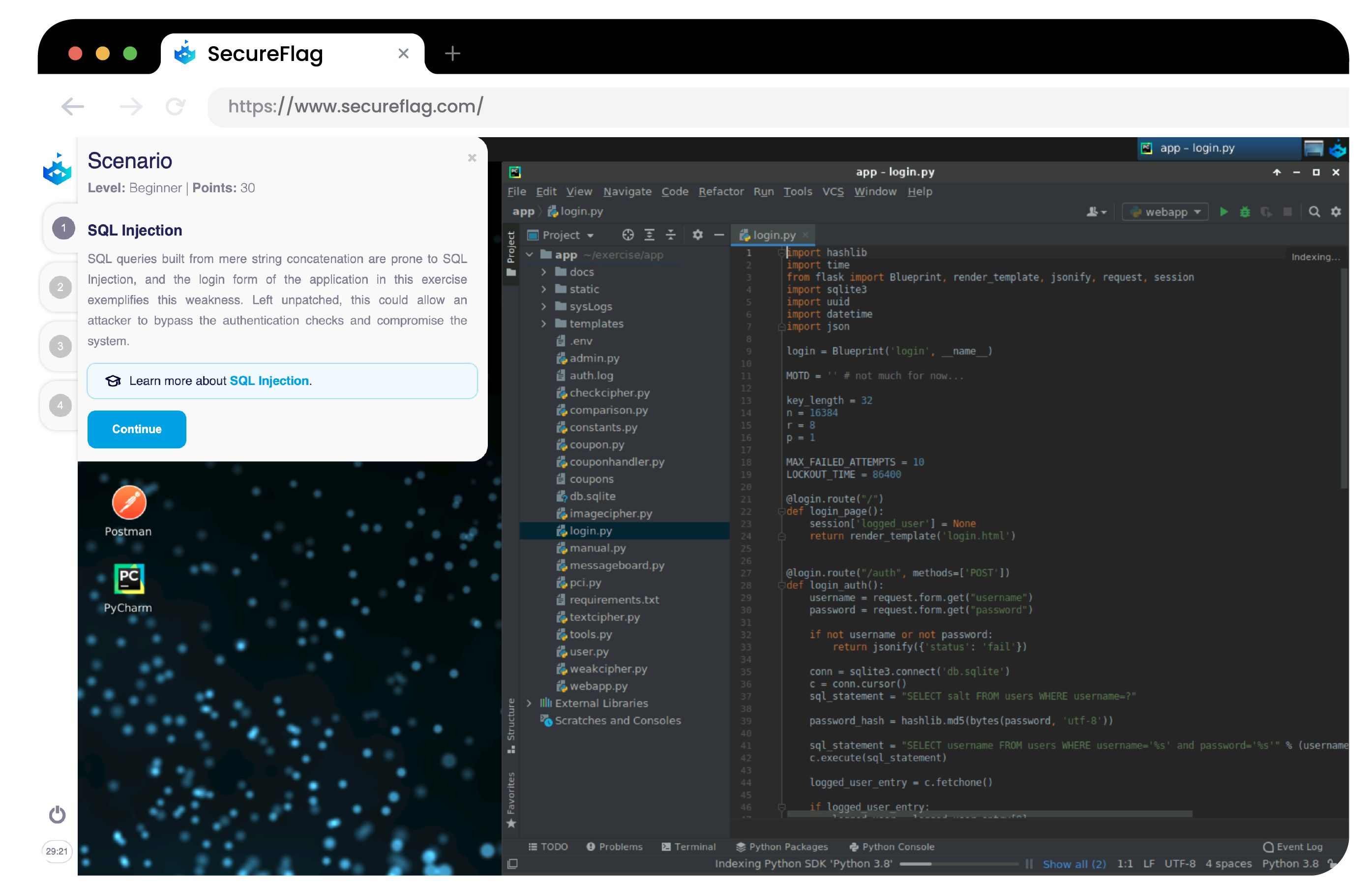

One of the most noticeable risks with AI-generated code is the potential for introducing security vulnerabilities by generating code that lacks secure coding practices, uses unsafe dependencies, or mishandles sensitive data.

- Unsafe Dependencies: AI tools can rely heavily on open-source code to generate code, which can, in turn, introduce insecure libraries or outdated dependencies that haven’t been reviewed for vulnerabilities. The AI’s knowledge is also limited to information available up to its last training date, meaning that it may suggest code or libraries that have since been shown to be insecure.

- Data Leaks: LLM-powered coding assistants with access to an organization’s internal data are becoming increasingly prevalent as organizations seek to take advantage of AI’s capabilities to speed up development. As a result, an assistant can inadvertently expose sensitive information, such as API keys, credentials, or proprietary algorithms, especially if it sends your data back to the AI provider’s servers for processing.

How to mitigate this generative AI risk: Implement a strong code review process for catching insecure practices. Use AI responsibly: check that the components it suggests are up to date, and take steps to minimize unnecessary data exposure, such as removing hardcoded secrets and keys from code, even during local development.
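To make the point about hardcoded secrets concrete, here is a minimal sketch in Python. It pairs a very small pattern-based check for secrets in source text with a helper that reads a key from the environment instead. The function names, the `PAYMENTS_API_KEY` variable, and the two patterns are assumptions made for this example; dedicated secret scanners use far larger rule sets.

```python
import os
import re
from typing import List

# Illustrative patterns only, not a complete rule set.
SECRET_PATTERNS = [
    # The general shape of an AWS access key ID
    re.compile(r"AKIA[0-9A-Z]{16}"),
    # A quoted literal assigned to a suspicious-looking name
    re.compile(r"(?i)(api_key|secret|password|token)\s*=\s*['\"][^'\"]{8,}['\"]"),
]


def find_hardcoded_secrets(source: str) -> List[int]:
    """Return the 1-based line numbers that match any pattern above."""
    hits = []
    for number, line in enumerate(source.splitlines(), start=1):
        if any(pattern.search(line) for pattern in SECRET_PATTERNS):
            hits.append(number)
    return hits


def get_api_key(name: str = "PAYMENTS_API_KEY") -> str:
    """Read a secret from the environment rather than from source code."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"environment variable {name} is not set")
    return value
```

A check like this could run before code is committed or before a file is shared with an assistant. It will miss secrets that do not fit the patterns, so it complements code review rather than replacing it.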

Decreased developer understanding of code

AI-generated code can create a situation where developers rely so heavily on auto-generated solutions that they do not fully understand them. This lack of deep comprehension increases the risk of “spaghetti code”: code that is disorganized, unmanageable, and difficult to maintain.

- Loss of Understanding: When developers don’t fully understand the code, they may struggle to debug or modify it later, making the codebase harder to maintain and new features harder to integrate.

How to mitigate this generative AI risk: Encourage developers to continuously review and refactor AI-generated code rather than using it as-is. Ensure they know the underlying logic and structure to maintain code quality.

Intellectual Property violations

Intellectual property violations are another significant generative AI risk. AI coding assistants are trained on massive datasets, including open-source and proprietary code, but the fact that code is open-source does not mean it can be used without limits. As a result, an assistant can reproduce code that is under a restrictive license, and a developer who accepts the suggestion may inadvertently commit an intellectual property violation.

- Risk of Using Infringing Code: Developers may unknowingly include copyrighted code in their projects, leading to legal issues if a product or feature is released using it.

- Lack of License Awareness: AI models don’t usually provide enough context on the licensing requirements of the code they generate, leaving developers to work out for themselves what is safe to use.

How to mitigate this generative AI risk: Developers should take responsibility for verifying the licenses of any code suggested by AI assistants. Tools that automatically check for license compliance can help reduce this risk.
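As a very rough sketch of what an automated check might look like, the Python below looks for an `SPDX-License-Identifier` tag in a suggested snippet and compares it against an allow-list. The allow-list and the function name are hypothetical and stand in for whatever policy your organization sets. Real license compliance tools do much more, including matching code that carries no license header at all.

```python
import re

# Hypothetical policy: licenses this team has approved for direct reuse.
ALLOWED_LICENSES = {"MIT", "Apache-2.0", "BSD-3-Clause"}

# Captures the first license ID after the tag. Compound expressions such as
# "MIT OR Apache-2.0" are not parsed in this sketch.
SPDX_TAG = re.compile(r"SPDX-License-Identifier:\s*([A-Za-z0-9.+-]+)")


def check_snippet_license(snippet: str) -> str:
    """Classify a snippet as 'allowed', 'review', or 'unknown'."""
    match = SPDX_TAG.search(snippet)
    if match is None:
        # No tag proves nothing: generated code rarely keeps its headers.
        return "unknown"
    if match.group(1) in ALLOWED_LICENSES:
        return "allowed"
    return "review"
```

Most AI suggestions will come back as "unknown" under a check like this, which is the point made above: the absence of license information is not permission, and those snippets still need a human decision.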

Code review challenges with AI-generated code

Reviewing AI-generated code adds another layer of complexity to the code review process and is therefore an important generative AI risk to consider. Teams may not know how best to evaluate code produced by AI, especially if it diverges from typical coding patterns or introduces unconventional approaches.

- New Code Review Skillset: Developers must learn how to assess AI-generated code effectively. AI may suggest patterns or logic that are syntactically correct but not optimized for security or performance.

- Need for Deeper Audits: Since AI may miss context-specific risks, human reviewers need to be extra vigilant, especially in areas involving sensitive data or critical logic.

How to mitigate this generative AI risk: Incorporate specialized code review processes tailored to AI-generated code. Ensure teams are trained in identifying unique risks associated with automated code generation.
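Here is a small example of code that is syntactically correct but not safe, of the kind a reviewer should learn to spot. It uses Python’s built-in `sqlite3` module with a made-up `users` table. The function names are hypothetical, and the first function is written the way an assistant might plausibly suggest it, not taken from any particular tool.

```python
import sqlite3


def find_user_unsafe(conn, username):
    # Runs fine in a demo, but user input becomes part of the SQL text.
    query = f"SELECT id, username FROM users WHERE username = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, username):
    # The ? placeholder binds the value as data, so it is never parsed as SQL.
    query = "SELECT id, username FROM users WHERE username = ?"
    return conn.execute(query, (username,)).fetchall()


# In-memory database with two example rows
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
conn.executemany("INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)])

# A classic injection payload: the quote closes the string early
payload = "nobody' OR '1'='1"
```

With this setup, `find_user_unsafe(conn, payload)` returns every row in the table, while `find_user_safe(conn, payload)` returns an empty list. Both functions behave identically for ordinary usernames, so the problem is easy to miss unless the reviewer is looking for it.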

Training data poisoning

AI models are only as good as the data they’re trained on. Malicious actors can manipulate training data to introduce vulnerabilities, a process known as training data poisoning. This can lead to the AI generating code that contains backdoors or other weaknesses.

How to mitigate this generative AI risk: You should implement robust data validation and QA processes to detect poisoning in training data, as well as regular audits to verify the integrity of the supply chain from which the data is sourced.
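One small piece of such an audit can be sketched in Python: comparing dataset files against a manifest of SHA-256 hashes recorded when the data was last reviewed. The function names and the manifest format (a dictionary of file name to hex digest) are assumptions for this example. Note that this only detects files that changed after the manifest was created. It cannot tell you whether the data was already poisoned when it was recorded.

```python
import hashlib
from pathlib import Path


def sha256_of(path):
    """Hash a file in chunks so large datasets do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_tampered_files(data_dir, manifest):
    """Return names from the manifest whose file is missing or has changed."""
    tampered = []
    for name, expected in sorted(manifest.items()):
        path = Path(data_dir) / name
        if not path.is_file() or sha256_of(path) != expected:
            tampered.append(name)
    return tampered
```

A check like this fits naturally at the start of a training or fine-tuning job, so that a run stops if any source file no longer matches what was reviewed.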

Generative AI risk mitigation strategies

To leverage the benefits of generative AI while minimizing the associated risks, organizations should adopt a multifaceted approach that includes code review, security testing, education, and controlled use cases.

Education and training

Developers should be educated on the potential risks and limitations of using AI-generated code. Secure coding training programs such as SecureFlag’s virtual labs can help developers understand how to review and integrate AI-generated code effectively and identify and mitigate security risks.

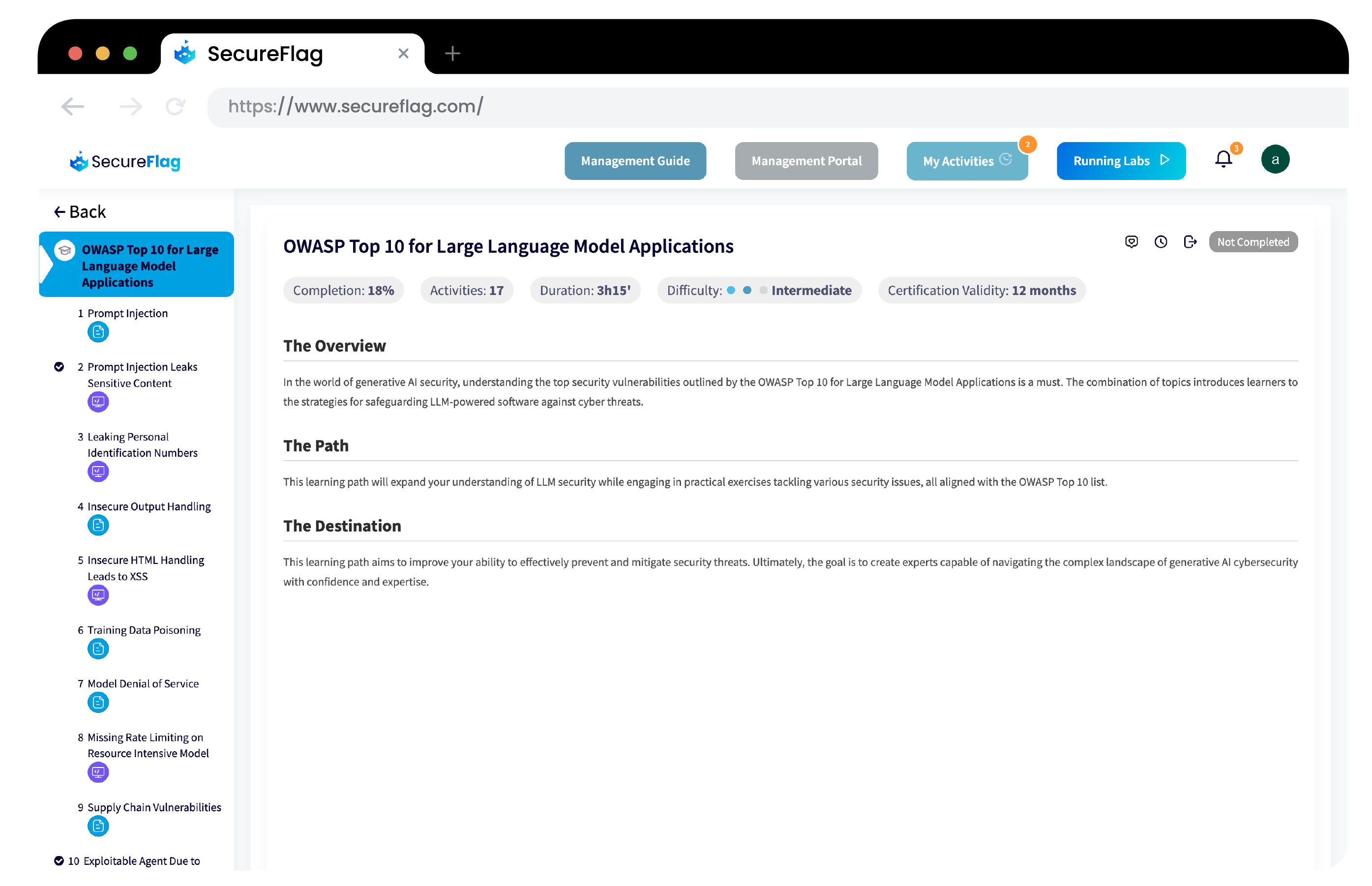

If you’re building LLM-based applications, it’s important to train your team on securing them properly. A great way to start is by following the OWASP Top 10 for Large Language Model Applications, which covers key security risks. SecureFlag also offers a practical Learning Path to help your team apply these best practices effectively.

Controlled use cases

Organizations should consider starting with non-critical systems when integrating AI-generated code. By initially applying AI to less critical components, organizations can evaluate the effectiveness and security of the AI’s output without exposing core systems to undue risk.

Incremental integration of AI-generated features into the codebase, accompanied by continuous monitoring and testing, can help identify and address issues early. This cautious approach allows organizations to build confidence in the AI’s capabilities while maintaining overall system security.
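One common way to do this kind of incremental integration is a percentage-based rollout with a fallback. The sketch below is an assumption about how a team might structure it, not a prescribed method: `legacy_total` stands in for an existing, reviewed implementation, `ai_generated_total` for a new AI-assisted one, and all names are hypothetical.

```python
import hashlib


def in_rollout(user_id: str, percent: int) -> bool:
    """Deterministically place a user in a bucket from 0 to 99."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100 < percent


def legacy_total(prices):
    # Existing implementation that has already been reviewed
    return sum(prices)


def ai_generated_total(prices):
    # Stand-in for a new AI-assisted implementation under evaluation
    return sum(prices)


def compute_total(user_id, prices, percent=10):
    """Send a share of users to the new path, falling back on any error."""
    if in_rollout(user_id, percent):
        try:
            return ai_generated_total(prices)
        except Exception:
            # In a real system this would also be logged and monitored
            return legacy_total(prices)
    return legacy_total(prices)
```

Because the bucket is derived from a hash of the user ID, the same user always takes the same path, which makes any issues easier to reproduce. Raising `percent` gradually widens exposure as confidence in the new code grows.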

Generative AI holds huge promise for accelerating software development and introducing new features. However, its limitations and security risks must be carefully managed to ensure that the benefits outweigh the potential downsides.

By adopting strategies such as thorough code review, comprehensive security testing, ongoing education, and controlled use cases, organizations can use the power of generative AI while minimizing the associated risks.