Research shows that by 2028, 75% of enterprise software engineers will use AI code assistants. While large language models (LLMs) have some great capabilities, they also bring with them security risks that are often unexpected or hard to detect.

That’s why the 2025 OWASP Top 10 is so valuable, as it highlights how systems are being targeted and what developers can do about it. We look at the latest risks and how SecureFlag helps address them.

About OWASP

Here’s a brief run-down for those who might not know what OWASP is.

OWASP, which stands for the Open Worldwide Application Security Project, is a nonprofit organization dedicated to improving software security. It is well-known for creating widely used open-source resources, such as the OWASP Top 10 lists, which cover APIs, IoT devices, and LLMs.

These resources are developed through global collaboration with security professionals and are freely available to help anyone enhance their security practices. OWASP’s reputation makes it a trusted authority in the field.

What Are Large Language Models?

LLMs are machine learning systems trained on massive volumes of content such as books, websites, documentation, and even code. They predict text based on a prompt and can generate anything from simple sentences to multi-paragraph explanations or functional source codes.

They’re super useful, but also complex. And because they operate on statistical probabilities, they can behave in ways developers don’t expect, especially when facing input crafted with malicious intent.

When you combine that unpredictability with integrations into business processes, APIs, and user-facing systems, you get a new class of security concerns, and that’s why there’s a need for a dedicated Top 10.

OWASP Top 10 for LLM Applications (2025)

1. Prompt Injection

This is still the biggest issue in LLM security, and it’s not going away anytime soon. Prompt injection and jailbreaking happen when an attacker crafts input that changes how the model responds.

Prompt injection can bypass safeguards, manipulate responses, and even trick the model into executing tasks it shouldn’t. OWASP breaks this into direct, indirect, and even multimodal prompt injections (yep, attackers are now hiding instructions inside images).

OWASP notes that effective protection relies on layered defenses and ongoing testing. This includes:

-

Restricting the model’s capabilities.

-

Enforcing tightly scoped and well-defined prompts.

-

Incorporating human oversight for sensitive actions.

-

Implementing machine learning-based prompt and output guards to detect and block malicious inputs or harmful responses.

2. Sensitive Information Disclosure

LLMs sometimes “remember” things they shouldn’t. That could be training data, internal prompts, or previous user sessions.

OWASP flags examples where models accidentally reveal:

-

API keys

-

Credentials

-

Private user data

-

Backend logic from the system prompts

Reduce the risk by keeping sensitive data out of prompts and training sets, setting proper session boundaries, and watching for signs of internal data leakage.

3. Supply Chain Vulnerabilities

Many LLM applications rely on third-party models, plug-ins, datasets, and integrations. If any of those components are compromised, the whole system could be at risk.

For example, fine-tuning a chatbot using a public dataset that’s been manipulated or relying on a model from an untrusted source. Even tools like embedding libraries or APIs used for running the model can let attackers in.

Package hallucination, or “slopsquatting”, occurs when the code generated by an LLM recommends or references a fictitious package for developers to integrate.

Just like with traditional software supply chains, carefully vet all sources and dependencies, including external live sources queried by agents or tools, and isolate anything you do not fully control.

4. Data and Model Poisoning

It’s possible for attackers to tamper with the data used to train or fine-tune a model so they can influence the model’s behavior under specific conditions. This is often done in ways that are hard to detect.

For instance, a poisoned dataset might cause the model to give biased or malicious outputs when prompted a certain way. Worse, this kind of manipulation can hide in plain sight.

To prevent poisoning, only use trusted datasets, review the fine-tuning process, and check for anomalies in the model’s behavior after updates.

5. Improper Output Handling

It’s easy to assume that if an LLM says something, it should be fine to use. But that’s risky. LLMs can hallucinate facts (confident-sounding responses that are simply incorrect), generate insecure code, or produce improper outputs that cause issues when passed directly into other systems.

If an application uses an LLM to suggest code without validation, it can introduce new vulnerabilities.

LLM outputs are often processed by other applications downstream, such as being rendered in a browser or executed in scripts, which can lead to well-known vulnerabilities like cross-site scripting (XSS), command injection, and other input-based attacks.

It’s best to treat LLM output like untrusted input, which means sanitizing, verifying, and reviewing it before using it.

6. Excessive Agency

More applications are embedding LLMs into AI agents, which are autonomous systems capable of sending emails, calling APIs, executing code, or modifying databases without human oversight.

This is where Excessive Agency becomes dangerous. The more autonomy a model has, the more damage an attacker can cause if they manage to take control, sometimes through prompt injection attacks.

OWASP recommends limiting what the model can access, using code and not prompts for sensitive tasks, and adding human approval for anything important.

7. System Prompt Leakage

System prompts, or the hidden instructions that guide a model’s behavior, were once thought to be private. But attackers have figured out how to see them, often using indirect prompts or clever wording.

Prompts can leak through misconfiguration or prompt injection, letting attackers gain insight into how the system works or even find ways to override its rules. When prompts are exposed, they can give attackers a way to bypass the model’s safeguards.

Treat system prompts as if they could become public, separate any sensitive data from the prompt, and use machine learning-based prompt and output guards to prevent leakage or exploitation.

8. Vector and Embedding Weaknesses

Applications that use embeddings, especially in Retrieval-Augmented Generation (RAG), rely on similarity searches to retrieve documents that are most relevant to a user’s query.

These searches compare the semantic meaning of the input against vectors stored in a database, retrieving the ones that are most similar based on their embeddings.

But if someone adds harmful or misleading content into that vector store, the model might pull it in and treat it as reliable.

This risk grows when embeddings are automatically created and not reviewed. Poisoned content might appear highly relevant based on similarity scores, even if it’s incorrect or intentionally malicious.

A robust defense includes cleaning and reviewing the indexed data, as well as adding checks or filters between the vector database and the model to catch unsafe content before it’s used.

9. Misinformation

This one’s less about security and more about integrity, but it still matters. LLMs can hallucinate answers, cite fake sources, or reinforce biases. This can be disastrous in the wrong context (e.g., medical advice, financial tools, legal chatbots).

Controls such as response verification, RAG validation, and human review are crucial in preventing misinformation from becoming a business risk.

10. Unbounded Consumption

LLMs are resource-hungry, and if inputs aren’t properly constrained, attackers (or just careless users) can flood your system with huge prompts, endless loops, or tasks that rack up massive API bills.

This isn’t just about outages, it’s about cost, stability, and availability.

Rate limits, timeouts, prompt size caps, and monitoring tools are your best friends here. Don’t wait for your cloud bill to be the first warning sign.

How SecureFlag Helps Secure LLM Applications

Knowing the risks is one thing, but addressing them is another, and that’s where SecureFlag can help. With a platform purpose-built to help development teams upskill and apply best practices for securing LLM applications.

Hands-On Labs for LLM Risks

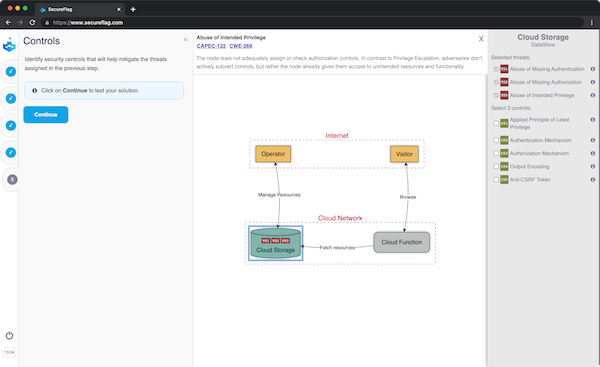

Developers learn best by doing, rather than just learning theory. Our interactive labs guide users through scenarios in the OWASP Top 10, such as prompt injection, data leakage, vector poisoning, and insecure output handling. These aren’t abstract demos; they reflect real-world setups where things often go wrong.

Guided Learning Path for Generative AI

This learning path offers practical, LLM-focused security training. It guides users through fixing vulnerabilities from the OWASP Top 10 for LLM Applications, with hands-on exercises that cover some of the most common vulnerabilities seen in the wild.

The path also explores new risks associated with agentic AI and the importance of securing Model Context Protocol (MCP) components. As these systems become more common, understanding how to secure them is quickly becoming a necessity.

Ongoing Learning and Team Readiness

SecureFlag helps teams stay up to date with new content, updated labs, and curated challenges that reflect the latest in generative AI risk.

Your team might only be experimenting with LLMs, or maybe you’re already scaling them across production environments. Whatever the case, SecureFlag provides the hands-on training, tools, and practical experience to make sure security isn’t left behind.

Interested in seeing SecureFlag in action? Book a free demo!